The HPWREN team is encouraging users of its products, in this case camera images, to share their stories.

This contributed article is by Kinshuk Govil, CEO of Open Climate Tech, a non-profit organization focused on building open source technologies that have the potential to help mitigate the adverse impacts of climate change.

Lessons learned from operating a public wildfire detection system

April 30, 2021

Limiting the destruction caused by wildfires requires a coordinated effort across numerous projects. In the hope of supporting these efforts, Open Climate Tech, an all-volunteer nonprofit, has built an automated early wildfire smoke detection system using images from HPWREN cameras. The main goal of the project is to detect fires earlier than the fastest existing methods in the region, which typically consist of individuals noticing and reporting a fire 15 minutes after ignition. Another goal is to inform everyone, for free and in real time, about potential fire events via an intuitive website: https://openclimatetech.org/wildfirecheck. The system has been operating every day since May 2020 and has successfully detected multiple fire ignitions since then. It has improved dramatically in this period, largely thanks to helpful feedback from regular users such as Forest Fire Lookout Association (FFLA) San Diego members and local fire agency personnel. This article describes some of the techniques used by the system and some of the lessons learned from one year of continuous operation.

The core detection subsystem is based on one of several AI computer vision techniques. Simpler techniques focus on determining whether or not an object is present in an image, while more complicated ones also try to determine the locations and/or motion of multiple instances of objects in images. This project chose an AI technique called classification, which focuses only on determining the presence of objects (in this case smoke), because these types of models are faster, more accurate, and require less data. The project is still able to determine the approximate location of smoke in the image using simpler alternatives described below.

Instead of researching and developing a fully custom AI model that could take years to perfect, this project uses the well-known AI model Inception v3, which has some of the best results in standard benchmarks for detecting objects in images. For best accuracy, the model has to be trained with images similar to the ones it will be checking. Therefore, for this project the Inception v3 model architecture was trained from scratch using approximately a hundred thousand unique image segments each with and without smoke in natural landscapes. Collecting such a large number of smoke images would be impossible without access to the archive of hundreds of millions of images from HPWREN cameras taken every minute for the last few years. The interesting smoke images in this immense archive were identified by correlating the locations of known fires from fire agency databases with the HPWREN camera locations and checking the start times of these fires. Volunteers searched these images for visible smoke from the first 15 minutes of each fire and marked the precise location of the smoke within the images. These markers were used to crop image segments containing smoke to train the AI model. Non-smoke training images were collected by iteratively training the model, using the trained model to check for smoke in many images, and then selecting manually verified false positives. After several iterations, this process led to the high-quality non-smoke training image set that is used today.
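The iterative collection of non-smoke images can be pictured as a loop of train, score, and manually verify. The Python sketch below is only an illustration of that loop: `train`, `is_smoke_free`, the 0.5 cutoff, and the batch structure are hypothetical stand-ins supplied by the caller, not the project's actual training code.

```python
from typing import Callable, Iterable, List, Sequence, TypeVar

Segment = TypeVar("Segment")  # any representation of an image segment


def mine_hard_negatives(
    train: Callable[[Sequence[Segment], Sequence[Segment]], Callable[[Segment], float]],
    smoke: Sequence[Segment],
    negatives: Sequence[Segment],
    batches: Iterable[Sequence[Segment]],
    is_smoke_free: Callable[[Segment], bool],
    cutoff: float = 0.5,  # assumed score above which a segment counts as "smoke"
) -> List[Segment]:
    """Grow the non-smoke training set with manually verified false positives."""
    collected = list(negatives)
    for batch in batches:
        # Retrain on everything gathered so far (hypothetical training function).
        score = train(smoke, collected)
        # Segments the current model believes contain smoke.
        flagged = [seg for seg in batch if score(seg) > cutoff]
        # Keep only those a human confirms are smoke-free: the false positives.
        collected.extend(seg for seg in flagged if is_smoke_free(seg))
    return collected
```

Each round adds the examples the current model gets wrong, so later rounds concentrate on progressively harder cases.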

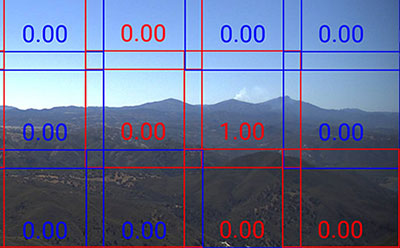

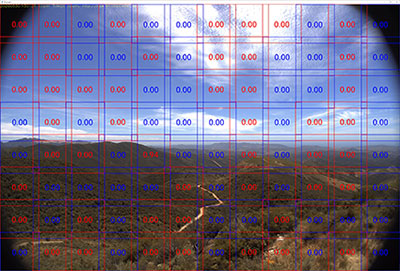

When the trained model is used to check images for fire, each image is broken into slightly overlapping 299x299 pixel square segments (the input size expected by Inception v3). Segmenting images into these squares has the added benefit of localizing the fire to one of the 299x299 pixel regions, overcoming one of the drawbacks of using the simpler AI model that determines only the presence, not the location, of objects. The model returns a value between 0 (no smoke) and 1 (likely smoke) for each square segment separately. A threshold value for fire is determined dynamically for each segment based on the maximum value seen in the last three days around the same time of day. This technique eliminates false positives due to repetitive events that occur near the same time each day, such as solar glare, solar reflection, fog, and haze.
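As a rough illustration of the tiling and thresholding described above, the following Python sketch computes overlapping 299x299 boxes and a per-segment threshold. The function names, the 20-pixel minimum overlap, and the 0.5 floor are assumptions made for this example. The article does not specify how much the segments overlap or whether a baseline threshold is combined with the historical maximum.

```python
from typing import List, Sequence, Tuple

SEGMENT = 299  # Inception v3 input size, as stated in the article


def tile_origins(length: int, size: int = SEGMENT, min_overlap: int = 20) -> List[int]:
    """Start offsets of evenly spread tiles of `size` pixels covering `length` pixels."""
    if length <= size:
        return [0]
    # Fewest tiles such that neighbours share at least `min_overlap` pixels.
    count = -(-(length - min_overlap) // (size - min_overlap))  # ceiling division
    stride = (length - size) / (count - 1)
    return [round(i * stride) for i in range(count)]


def segments(width: int, height: int) -> List[Tuple[int, int, int, int]]:
    """(left, top, right, bottom) boxes; assumes the image is at least 299 px per side."""
    return [
        (x, y, x + SEGMENT, y + SEGMENT)
        for y in tile_origins(height)
        for x in tile_origins(width)
    ]


def dynamic_threshold(recent_scores: Sequence[float], floor: float = 0.5) -> float:
    """Highest score this segment produced near this time of day on recent days,
    but never below `floor` (the floor is an assumption of this sketch)."""
    return max([floor, *recent_scores])
```

For example, a segment whose scores around the same time on the previous three days were 0.2, 0.7, and 0.3 would need to score above 0.7 today before being flagged.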

This image illustrates how an image is subdivided into the segments that are evaluated individually by the AI model.

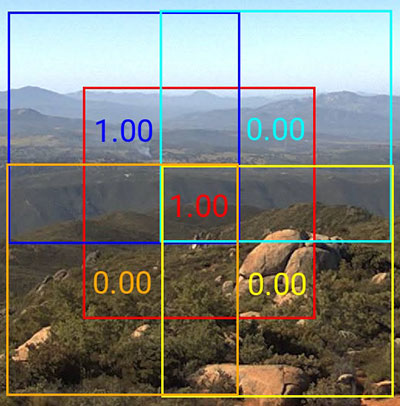

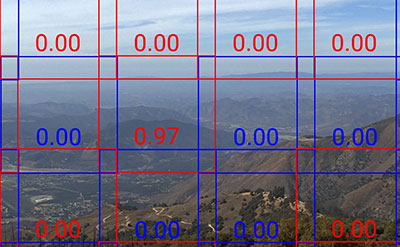

Sample false positive: a portion of an image without smoke that is incorrectly given a high score by the AI model

During the past twelve months of operation, multiple lessons have emerged. One of the main lessons is that users are less tolerant of false positives than they claim to be. This is likely because investigating multiple notifications that turn out to be false positives reduces the perceived urgency of investigating the next notification. Therefore, several additional filters were applied to reduce false positives, and some of these filters also provide secondary benefits. One of the simplest filters removes the top part of the image that lies significantly above the horizon. This filter not only removes false positives triggered by cloud formations, but also speeds up image analysis because part of the image does not have to be analyzed. Another filter improves accuracy while further localizing the potential fire region. Once the main model determines that a square segment contains smoke, the same model is used to score four new square segments around the original square, shifted towards the four diagonal directions as illustrated in the image below. The event is ignored if none of these shifted squares score above 0.5, and the fire region is reduced to the intersection of all squares scoring more than 0.5.
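The shifted-square filter can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: `score_fn` is a hypothetical stand-in for running the model on a box, the 75-pixel shift is an arbitrary choice, the original square is included in the intersection, and clipping to the image bounds is ignored.

```python
from typing import Callable, List, Optional, Tuple

Box = Tuple[int, int, int, int]  # (left, top, right, bottom) in pixels


def shifted_boxes(box: Box, shift: int) -> List[Box]:
    """The four squares offset diagonally from `box` by `shift` pixels."""
    left, top, right, bottom = box
    return [
        (left + dx, top + dy, right + dx, bottom + dy)
        for dx in (-shift, shift)
        for dy in (-shift, shift)
    ]


def intersect(boxes: List[Box]) -> Optional[Box]:
    """Common area of all boxes, or None if they do not overlap."""
    left = max(b[0] for b in boxes)
    top = max(b[1] for b in boxes)
    right = min(b[2] for b in boxes)
    bottom = min(b[3] for b in boxes)
    return (left, top, right, bottom) if left < right and top < bottom else None


def refine(box: Box, score_fn: Callable[[Box], float],
           shift: int = 75, cutoff: float = 0.5) -> Optional[Box]:
    """Return the narrowed fire region, or None if the event should be ignored."""
    hits = [b for b in shifted_boxes(box, shift) if score_fn(b) > cutoff]
    if not hits:
        return None  # no shifted square confirms the smoke
    return intersect([box] + hits)
```

If only the two squares shifted to the right score above 0.5, the returned region is the right-hand part of the original square, which narrows where the smoke is likely to be.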

Although false positives cannot be fully eliminated, the combination of these and other filters not mentioned above results in a very low false positive rate (20 per day across all 100 cameras, equivalent to a single alert per camera every five days).

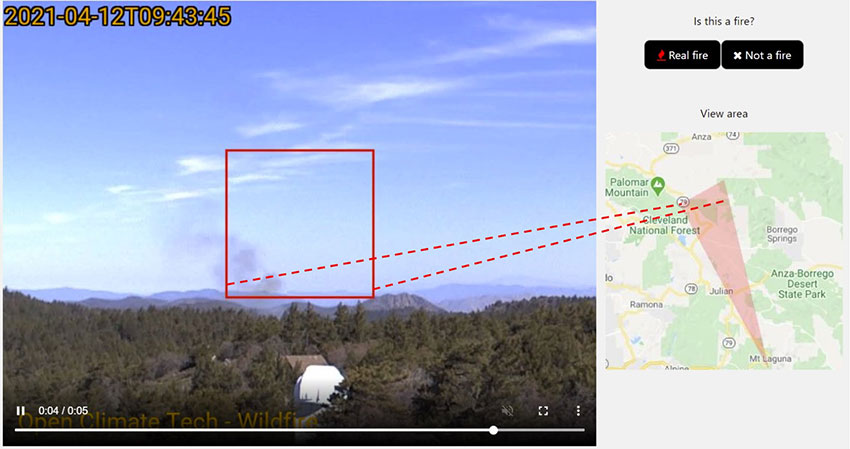

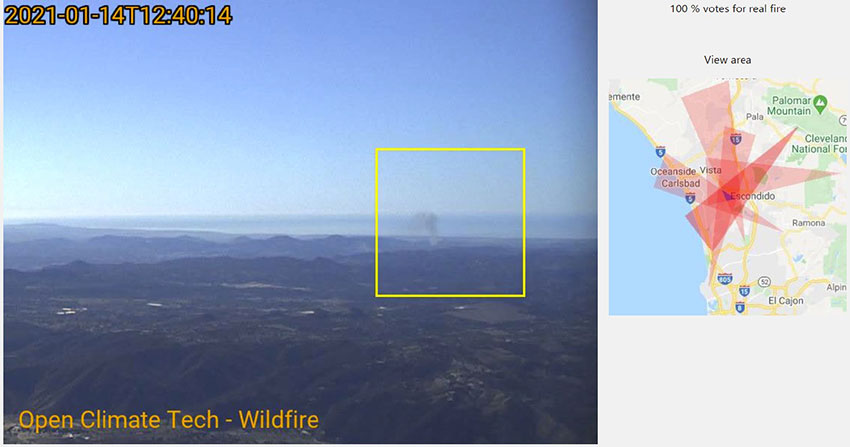

Another lesson learned from operating the site is that visualization of the events and usability of the site are as important as the accuracy of the core detection algorithm. The primary goal for visualization is to allow people to quickly and easily validate whether an event is a real fire. Stitching together still images of the past few minutes into a time-lapse movie has proven very helpful in distinguishing the motion of true smoke from fires vs. random anomalies. A secondary goal is to geographically locate the fire. Gauging the range of distances from a 299x299 pixel vertical segment of an image is challenging due to terrain variability, but it is straightforward to determine the horizontal direction of the fire given the horizontal field of view and the heading of the camera. The calculated fire heading is translated into a thin wedge pointing away from the camera location and extending 40 miles. The wedge is shaded on a map displayed next to the fire video as shown in the example below.
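The heading calculation can be illustrated with a short Python sketch. It assumes that heading varies linearly with horizontal pixel position, which ignores lens projection and distortion. The function and parameter names are made up for this example.

```python
from typing import Tuple


def pixel_heading(x: float, image_width: int,
                  camera_heading_deg: float, hfov_deg: float) -> float:
    """Compass heading (degrees clockwise from north) for image column `x`.

    Assumes the camera heading points at the image centre and that angle
    changes linearly across the horizontal field of view.
    """
    offset = (x / image_width - 0.5) * hfov_deg
    return (camera_heading_deg + offset) % 360.0


def wedge_headings(x_left: float, x_right: float, image_width: int,
                   camera_heading_deg: float, hfov_deg: float) -> Tuple[float, float]:
    """Headings of the two edges of the wedge for a segment spanning x_left..x_right."""
    return (
        pixel_heading(x_left, image_width, camera_heading_deg, hfov_deg),
        pixel_heading(x_right, image_width, camera_heading_deg, hfov_deg),
    )
```

For a camera facing east (90 degrees) with a 60 degree field of view, the centre column maps to 90 degrees and the right edge of the image to 120 degrees.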

In many cases, cameras at different locations observe the same fire, and it is useful to merge the wedges onto the same map. This is done by checking for intersecting wedges in recent notifications, shading all the intersecting wedges, and highlighting the intersection area on the map. Besides pinpointing the location, multiple perspectives also increase confidence that the event is a real fire, and they make it easier for website users to judge whether it is one.
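A simplified way to picture the intersection step is to treat each wedge as a single centre line and find where two such lines cross. The Python sketch below does this on a flat local map with coordinates in kilometres. Both simplifications are assumptions of this example, and the site itself intersects shaded wedges rather than lines.

```python
import math
from typing import Optional, Tuple

Point = Tuple[float, float]  # (east_km, north_km) on a local flat map (assumption)
MAX_RANGE_KM = 40 * 1.609344  # the article's wedges extend 40 miles


def bearing_intersection(cam_a: Point, bearing_a_deg: float,
                         cam_b: Point, bearing_b_deg: float,
                         max_range_km: float = MAX_RANGE_KM) -> Optional[Point]:
    """Point where two camera sight lines cross, or None if they do not."""
    # Unit direction vectors; bearings are measured clockwise from north.
    ax, ay = math.sin(math.radians(bearing_a_deg)), math.cos(math.radians(bearing_a_deg))
    bx, by = math.sin(math.radians(bearing_b_deg)), math.cos(math.radians(bearing_b_deg))
    denom = ax * by - ay * bx
    if abs(denom) < 1e-9:
        return None  # parallel sight lines never cross
    dx, dy = cam_b[0] - cam_a[0], cam_b[1] - cam_a[1]
    dist_a = (dx * by - dy * bx) / denom  # distance along camera A's line
    dist_b = (dx * ay - dy * ax) / denom  # distance along camera B's line
    # The crossing must lie in front of both cameras and within wedge range.
    if not (0 <= dist_a <= max_range_km and 0 <= dist_b <= max_range_km):
        return None
    return (cam_a[0] + dist_a * ax, cam_a[1] + dist_a * ay)
```

Two cameras 10 km apart on an east-west line, one looking northeast and the other northwest, would place the fire midway between them and 5 km to the north.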

Some users provided feedback to improve website usability beyond visualization of events. One of these was to notify users of new real-time events because users do not stare at the site continuously. Another notable issue was that some users are only interested in potential fires in geographical regions smaller than the entire region monitored by the system. To address both these concerns, the site implemented a preferences page where users can indicate their desired geographical region and their desire to be notified as new events are detected.

Overall, the experience of the last year has shown that such systems can be effective in early detection, and that the detection algorithm and the website usability are equally important. There have been over a dozen cases where the initial detection from the site was faster than the first report from a local fire agency. This system will never be better than people actively looking for smoke from fire towers, but it does operate every day, and users are automatically alerted whenever a potential fire is detected. Systems like these are going to be more valuable in sparsely populated areas. Another interesting observation from one fire agency official was that the system is valuable for enhanced situational awareness and post-fire documentation even when people have reported the fire before the system detected it.

Open Climate Tech would like to acknowledge the FFLA San Diego and HPWREN groups, as well as other volunteers, without whom this project would not be possible. More volunteers are always welcome to join the non-profit. The project is also looking to expand to more cameras with operators willing to share real-time images for smoke detection processing. Please email info@openclimatetech.org if you wish to volunteer your time, can help connect the project with camera operators, or want to discuss relevant ideas.